Interesting LLM Developments in 2025

The last few months have been tumultuous for AI, with DeepSeek’s R1 demonstrating how Reinforcement Learning can bring out enough reasoning power from smaller models to compete with models 10 times as expensive to run. Not long after this release, a 3B model was trained for $30, learning to develop reasoning capabilities on its own.

These breakthroughs exploit Chain of Thought (CoT), where models learn to output text as intermediate reasoning steps, similar to how some of us humans think using an inner monologue.

These models can step through a solution while talking to themselves, essentially rubber ducking. The drawback is that the whole CoT has to fit in the context window. Could it also be limited by the fact that reasoning only happens through language? Maybe this isn’t optimal for tasks where humans would call on spatial reasoning, intuition, morality or similar faculties.

A recent paper explores how to induce non-verbal reasoning by letting the reasoning steps happen in latent space. It demonstrates how a Recurrent Neural Network placed between the embedding and attention layers acts as a latent space equivalent of CoT. Instead of verbalizing the reasoning steps, the model reasons in the embedding space directly. My personal opinion is that this is a much richer form of reasoning, possibly allowing for more abstraction, and maybe even computation or a world model?

Implicit Reasoning in LLMs

I’d like to dive a little deeper into the paper above and add my own musings.

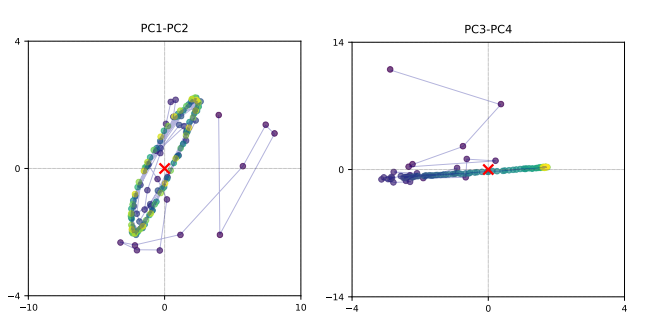

The innovation in the paper is an RNN with skip connections that steps through a chain of reasoning for each embedded token. The recurrence runs for k steps until it converges to a fixed point, so the model itself decides when it’s done reasoning, and the number of steps can differ for each token.
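To make the idea of “iterate until a fixed point” concrete, here is a minimal Python sketch. It is not the paper’s architecture: the `step` function, the `tanh` update, the weight `w=0.5`, the tolerance and the toy embeddings are all assumptions I picked so that the iteration is guaranteed to converge. The only point is that different inputs need a different number of steps.

```python
import math

def step(h, x, w=0.5):
    """One recurrent update: a contractive map plus a skip connection from the input x.

    The tanh form and the weight w are illustrative assumptions, not the paper's layer.
    """
    return [math.tanh(w * hi) + xi for hi, xi in zip(h, x)]

def reason_until_converged(x, tol=1e-6, max_steps=100):
    """Iterate the recurrent step from h = 0 until the state stops changing.

    Returns the final latent state and the number of steps taken.
    """
    h = [0.0] * len(x)
    for k in range(1, max_steps + 1):
        h_next = step(h, x)
        delta = max(abs(a - b) for a, b in zip(h_next, h))
        h = h_next
        if delta < tol:
            return h, k
    return h, max_steps

# Two hypothetical token embeddings: one already at the fixed point, one far from it.
tokens = {"easy": [0.0, 0.0], "hard": [1.5, -2.0]}
for name, emb in tokens.items():
    state, steps = reason_until_converged(emb)
    print(name, steps, [round(v, 4) for v in state])
```

The “easy” token stops after a single step because its starting state is already a fixed point, while the “hard” token needs several more. In a real model the stopping rule and the update would of course be learned and far higher dimensional.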

I think it would be interesting to see this latent reasoning explored in terms of Neural ODEs and Deep Equilibrium Networks.

The implicit function theorem tells us that such a fixed point iteration \(\lim_{n\rightarrow \infty} f_n \circ f_{n-1} \circ \cdots \circ f_1\) can be described by one pass through another function \(f\), the “pre-iterated” RNN if you will. This is the basis for Deep Equilibrium Networks, and implies the (latent) chain of thought can be summarized by just one layer. No longer a chain of thought, maybe closer to intuition?
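As a small illustration of treating the fixed point as a single function, consider a scalar toy map \(z = \tanh(Wz) + x\) (my own assumption, chosen because it is a contraction for \(|W| < 1\)). At the fixed point \(z^*\), the implicit function theorem gives the sensitivity \(dz^*/dx = (1 - \partial f/\partial z)^{-1}\, \partial f/\partial x\) without unrolling any iterations. The sketch below compares that formula to a finite-difference estimate; all names and constants are hypothetical.

```python
import math

W = 0.5  # hypothetical weight; |W| < 1 keeps the map a contraction

def f(z, x):
    """Toy recurrent update whose fixed point we want."""
    return math.tanh(W * z) + x

def fixed_point(x, tol=1e-12, max_steps=1000):
    """Find z* with z* = f(z*, x) by plain iteration."""
    z = 0.0
    for _ in range(max_steps):
        z_next = f(z, x)
        if abs(z_next - z) < tol:
            return z_next
        z = z_next
    return z

def implicit_grad(x):
    """dz*/dx from the implicit function theorem, evaluated only at the fixed point."""
    z_star = fixed_point(x)
    df_dz = W * (1.0 - math.tanh(W * z_star) ** 2)
    df_dx = 1.0
    return df_dx / (1.0 - df_dz)

x = 1.5
eps = 1e-6
# Central finite difference through the whole iteration, for comparison.
numeric = (fixed_point(x + eps) - fixed_point(x - eps)) / (2 * eps)
print(implicit_grad(x), numeric)
```

The two numbers should agree closely, which is the practical appeal: the gradient depends only on the converged state, so the intermediate “reasoning steps” never need to be stored.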

An RNN can be seen as a first order discretization of a Neural ODE. This technique could possibly allow for memory savings, avoiding the truncated backprop done in the paper. A drawback is that Neural ODEs can only represent smooth homeomorphisms, but this can be remedied by introducing additional dimensions. This would essentially let solution paths cross over each other by utilizing an extra dimension. Adding extra dimensions sounds to me like you’d enrich the reasoning behaviour beyond concepts that could be described in words. You would have a whole separate embedding sub-space dedicated to pure non-verbal reasoning or maybe computation?
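Here is a rough sketch of both points, using a toy example of my own rather than anything from the paper. A forward Euler step \(h \leftarrow h + \Delta t \, v(h)\) has the same shape as a residual recurrent update. In one dimension, no ODE flow can map \(x \mapsto -x\), since the paths starting at \(1\) and \(-1\) would have to cross. With one extra (augmented) dimension, a simple half-turn rotation does the job. The function names, the rotation field and the step count are assumptions for illustration.

```python
import math

def euler_flow(h, velocity, steps=10_000, t_end=1.0):
    """Integrate dh/dt = velocity(h) with forward Euler.

    Each update h <- h + dt * velocity(h) has the same form as a residual/recurrent step.
    """
    dt = t_end / steps
    for _ in range(steps):
        v = velocity(h)
        h = [hi + dt * vi for hi, vi in zip(h, v)]
    return h

def rotate(h):
    """Velocity field of a half-turn per unit time in the augmented 2-D space."""
    x, a = h
    return [-math.pi * a, math.pi * x]

def flip_sign(x):
    """Map x -> -x: augment x with a zero, flow, then read back the first coordinate."""
    return euler_flow([x, 0.0], rotate)[0]

print(flip_sign(1.0), flip_sign(-1.0))
```

The results land close to \(-1\) and \(1\) respectively (up to Euler discretization error): the two paths pass around each other in the extra dimension instead of colliding on the line.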

I’ve also been curious about introducing completely new tokens just for reasoning in CoT, and letting the model assign meaning to these through RL. I’m speculating this could let the model use these as computational building blocks for abstract reasoning.

Future

I’m keeping an eye out for more papers and models that show emergent reasoning properties of LLMs. To me, the progress in the past month has been some of the most interesting in AI so far, and I’m looking forward to what we’ll see later on in 2025.

I think this concept of latent reasoning will catch on, and possibly be extended with other techniques.

I expect an even more intense focus on Reinforcement Learning given the success of R1.

I think LLMs will learn to compute and reason non-verbally in ways that will let equally sized or smaller models beat the current SOTA on benchmarks like ARC-AGI.

AGI in 2025? My guess is No.